interTwin: Co-designing and prototyping an interdisciplinary Digital Twin Engine

interTwin seeks to co-design and implement a prototype of an open-source Digital Twin Engine (DTE), built upon open standards, facilitating seamless integration with application-specific Digital Twins (DTs). This innovative platform, rooted in a co-designed interoperability framework and the conceptual model of a DT for research, known as the DTE blueprint architecture, aims to simplify and accelerate the development of complex application-specific DTs.

Overview

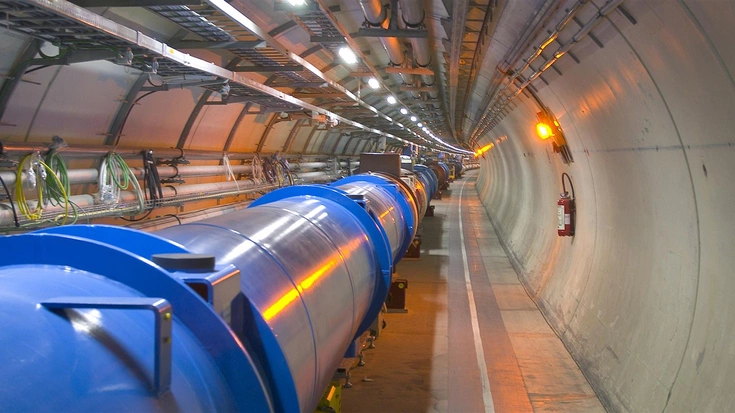

interTwin develops and implements an open-source DTE that offers generic and customized software components for modeling and simulation, promoting interdisciplinary collaboration. The DTE blueprint architecture, guided by open standards, aims to create a common approach applicable across scientific disciplines. Use cases span high-energy physics, radio astronomy, climate research, and environmental monitoring. The project draws on expertise from European research infrastructures, which allows the technology to be validated across facilities and makes it more accessible. interTwin aligns with initiatives such as Destination Earth, EOSC, EuroGEO, and EU data spaces for continuous development and collaboration.

Highlights in 2025

In 2025, we extended our itwinai-based integration beyond Juelich (JUWELS system) and Vega to additional EuroHPC systems: LUMI with AMD GPUs and Deucalion with Fujitsu A64FX CPUs, accessed via CERN openlab. On these platforms we benchmarked distributed AI workloads, studying strong and weak scaling through epoch times, per-iteration timing, and detailed profiles of communication overhead between workers. We complemented this with measurements of GPU energy use and of GPU and CPU utilisation, using them as signals to guide performance tuning and configuration choices. Hyper-parameter optimisation was exercised at scale to improve the accuracy of AI-based digital twins. Finally, we demonstrated cloud-HPC integration using interLink, offloading distributed training jobs from a cloud environment to the Vega HPC system and running scaling tests to validate the portability of our AI workflow stack.
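As an illustration of how epoch times translate into scaling figures, the sketch below computes strong-scaling speedup and efficiency (fixed total dataset, more workers) and weak-scaling efficiency (fixed per-worker load, more workers). It is a minimal sketch using the standard textbook definitions, not the project's benchmarking code; the function names and the epoch times in the usage example are hypothetical and are not interTwin measurements.

```python
def strong_scaling(epoch_times):
    """Speedup and efficiency for a fixed total problem size.

    epoch_times maps worker count -> epoch time in seconds.
    The smallest worker count is used as the baseline.
    Returns {workers: (speedup, efficiency)}.
    """
    base_n = min(epoch_times)
    base_t = epoch_times[base_n]
    result = {}
    for n in sorted(epoch_times):
        speedup = base_t / epoch_times[n]
        # Ideal speedup equals the increase in worker count.
        efficiency = speedup / (n / base_n)
        result[n] = (speedup, efficiency)
    return result


def weak_scaling_efficiency(epoch_times):
    """Efficiency when the per-worker load is held constant.

    Ideal behaviour is a constant epoch time, so efficiency is
    the baseline time divided by the time at each worker count.
    """
    base_t = epoch_times[min(epoch_times)]
    return {n: base_t / epoch_times[n] for n in sorted(epoch_times)}


if __name__ == "__main__":
    # Hypothetical epoch times in seconds, for illustration only.
    strong = {1: 400.0, 2: 210.0, 4: 115.0, 8: 70.0}
    for n, (s, e) in strong_scaling(strong).items():
        print(f"{n} workers: speedup {s:.2f}, efficiency {e:.2f}")
```

An efficiency that drops as workers are added usually points to growing communication overhead, which is where per-iteration timing and communication profiles become useful.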

Next Steps

The interTwin project completed its planned activities in 2025 and delivered valuable technical insights. The results will inform future R&D directions and projects, and provide a foundation for new initiatives and continued collaborations.

Project Coordinator

Tiziana Ferrari (EGI)

CERN Technical Team

Matteo Bunino, Maria Girone, Eric Wulff, Kalliopi Tsolaki, Jarl Sondre Saether, Anna Lappe, Linus Maximilian Eickhoff, Enrique Garcia Garcia, Xavier Espinal, Sofia Vallecorsa

Consortium Members

CERFACS, CERN, CESNET, CMCC, CNRS, CSIC, Cyfronet, Deltares, DESY, ECMWF, LIP, MPG, PSNC, TU Wien, UHEI, UNITN, UPV, VU, WWU, EGI Foundation, EODC, ETH Zurich, EURAC, FZJ, GRNET, INFN, JSI/IZUM, KIT, KBFI

Publications & Presentations

M. Girone & M. Bunino, itwinai: Enabling Scalable AI Workflows on HPC for Digital Twins in Science (P29 poster). Presented at PASC25 – Platform for Advanced Scientific Computing Conference, Brugg, Switzerland, 2025.

M. Girone & M. Bunino, Enabling Lattice QCD Normalizing Flows in HPC Infrastructures (P110 poster). Presented at PASC25 – Platform for Advanced Scientific Computing Conference, Brugg, Switzerland, 2025.

M. Bunino, M. Girone, A. Lappe, J. Sondre Saether, Co-designing and Prototyping Interdisciplinary Digital Twins (15 min). Presented at 2025 CERN openlab Technical Workshop, CERN, Geneva, 5 March 2025.

M. Bunino & D. Ciangottini, Testing AI Containers for Digital Twins in Science: A Cloud-HPC Workflow. Presented at KubeCon + CloudNativeCon Europe 2025, 4 April 2025.

M. Girone & M. Bunino, Shaping the Future of Scientific Digital Twins: Tales from Physics, Climate Research, and Data Centre Operations (BoF session). Presented at ISC High Performance 2025, Hamburg, 11 June 2025.

interTwin is funded by the European Union under Grant Agreement Number 101058386.