ODISSEE

ODISSEE aims to use AI to cope with the data deluge from SKAO and CERN’s HL-LHC by making their computing workflows more energy-efficient and sustainable. The project co-designs modular software and hardware building blocks, benchmarks emerging architectures, and improves workflow portability. This EU-funded project federates the efforts of three pan-European ESFRI infrastructures (HL-LHC, SKAO and SLICES-RI) spanning the physical sciences, Big Data and the computing continuum. Together they support flagship instruments that will maintain and strengthen European leadership in high-energy physics and astronomy.

Overview

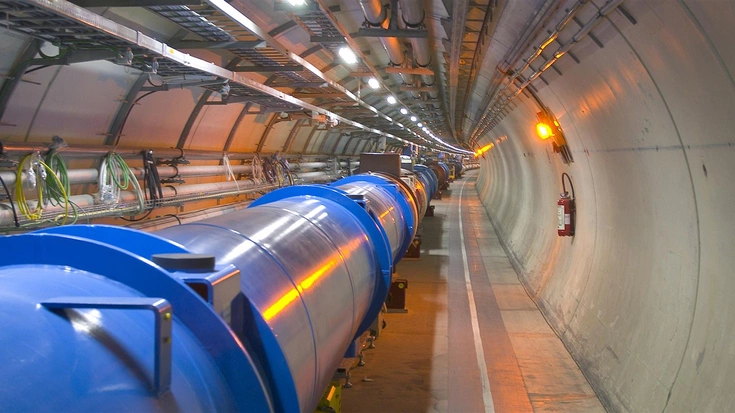

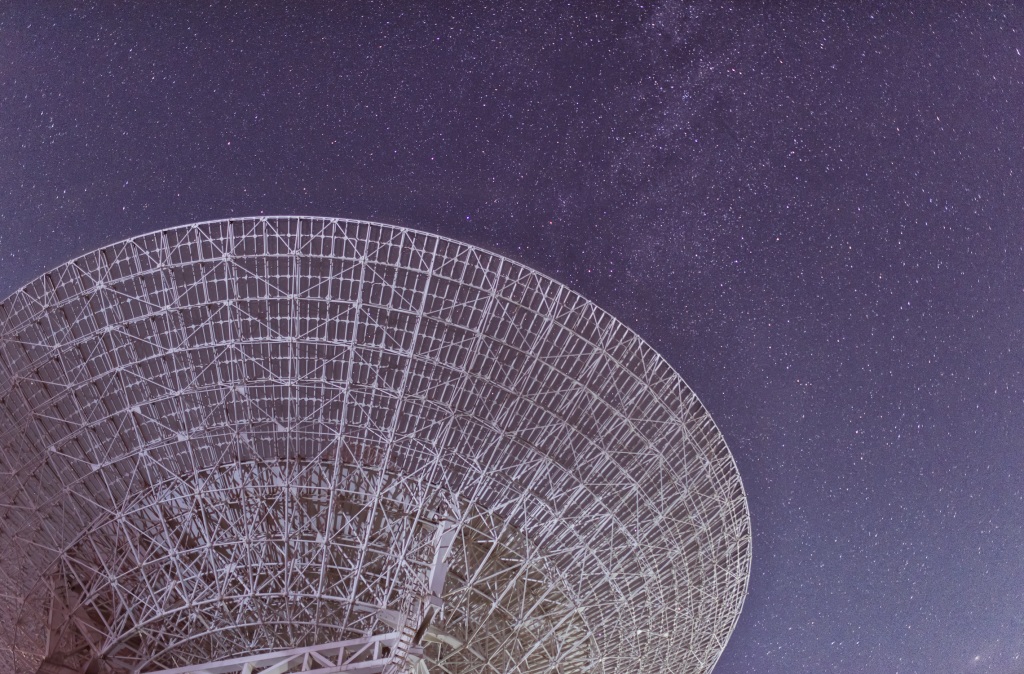

Next-generation instruments such as the HL-LHC and SKAO will produce exabyte-scale data and significantly increase the power demand of computing and data-centre infrastructures. To meet this challenge, the ODISSEE project aims to transform the way we process, analyse and store data. ODISSEE is developing AI-based technologies that process the data stream on the fly and retain only the relevant data. This approach will enable scientists to build more complex, yet reliable, physical models, whether at the microscopic or the astronomical scale.

At the same time, the project is redesigning hardware and software solutions that cover the entire data-stream continuum, from generation to analysis. The primary objective is to create tools that are energy-efficient, adaptable and future-proof. This involves developing an AI-driven, reconfigurable network of heterogeneous processing elements.

Highlights in 2025

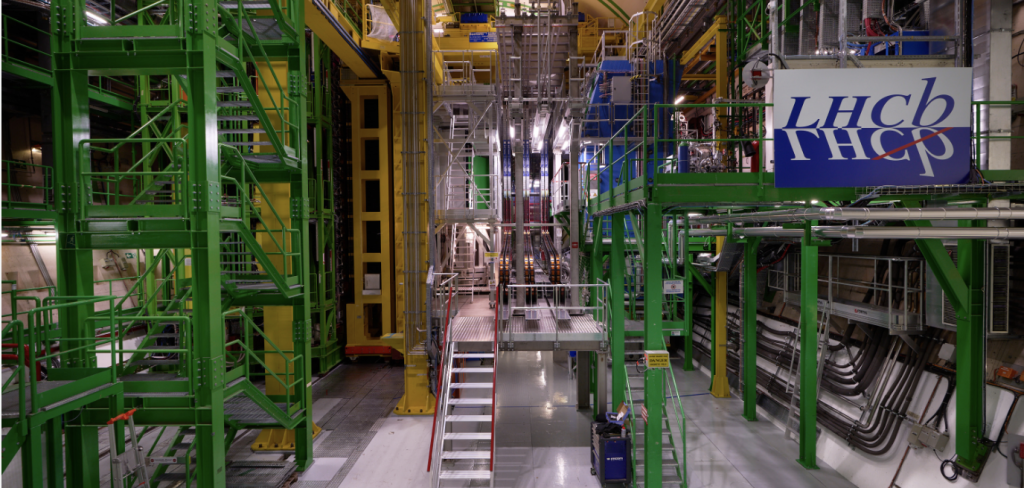

In 2025, work focused on preparing the data-centre digital-twin use case for the technical work to follow. We started gathering requirements from the main scientific communities, including CERN’s LHCb experiment and the CERN data centre. This work will define target scenarios for energy-aware operation and predictive maintenance, the relevant timescales, and the acceptable trade-offs between energy savings, system utilisation and reliability.

We performed a survey of existing tools, frameworks and telemetry sources that could underpin the data-centre digital twin. This included studying solutions such as the Energy Aware Runtime (EAR) and related monitoring stacks, and mapping how they could expose power and performance metrics for both compute nodes and cooling equipment. Taken together, these activities provide a common vocabulary and technical baseline, and prepare the ground for implementing and validating a first digital-twin prototype on the LHCb data centre in the next project phase.

Next Steps

As a next step, we will implement and validate a first digital-twin prototype on the LHCb data centre. This will include integrating selected telemetry sources, instantiating power and reliability models for compute and cooling equipment, and running pilot optimisation and predictive-maintenance studies on a limited set of services. Results will guide further refinement and potential adoption at additional CERN and partner sites.

Project Coordinator

Damien Gratadour (Observatoire de Paris)

CERN Technical Team

Pierfrancesco Cifra, Francesco Sborzacchi, Niko Neufeld, Maria Girone, Matteo Bunino

Consortium Members

Observatoire de Paris, CNRS, CERN, SKAO, ASTRON, INRIA, BSC, CSCS, Simula, SURF, GENCI, EAR, SiPearl, NextSilicon, NEOVIA, EPFL

EU-funded Project

This project has received funding from the European Union’s Horizon Europe research and innovation programme under grant agreement No 101188332.